The data loss prevention (DLP) landscape has taken a long while to catch up with the realities of the public cloud. In this post, we’ll explain why this tooling, developed in the on-premises era, struggles to adapt to the dynamic nature and unique characteristics of cloud environments. We’ll then suggest an alternative framework for designing cloud DLP based on five core components.

A Quick Definition of DLP

Data loss prevention (DLP) is a security strategy and set of associated tools used to protect organizations from data breaches and other threats to sensitive data. DLP is a crucial piece of the cybersecurity puzzle because most organizations store data that must remain private to comply with regulations, protect customers' data privacy, or prevent trade secrets from leaking.

How It Used to Work

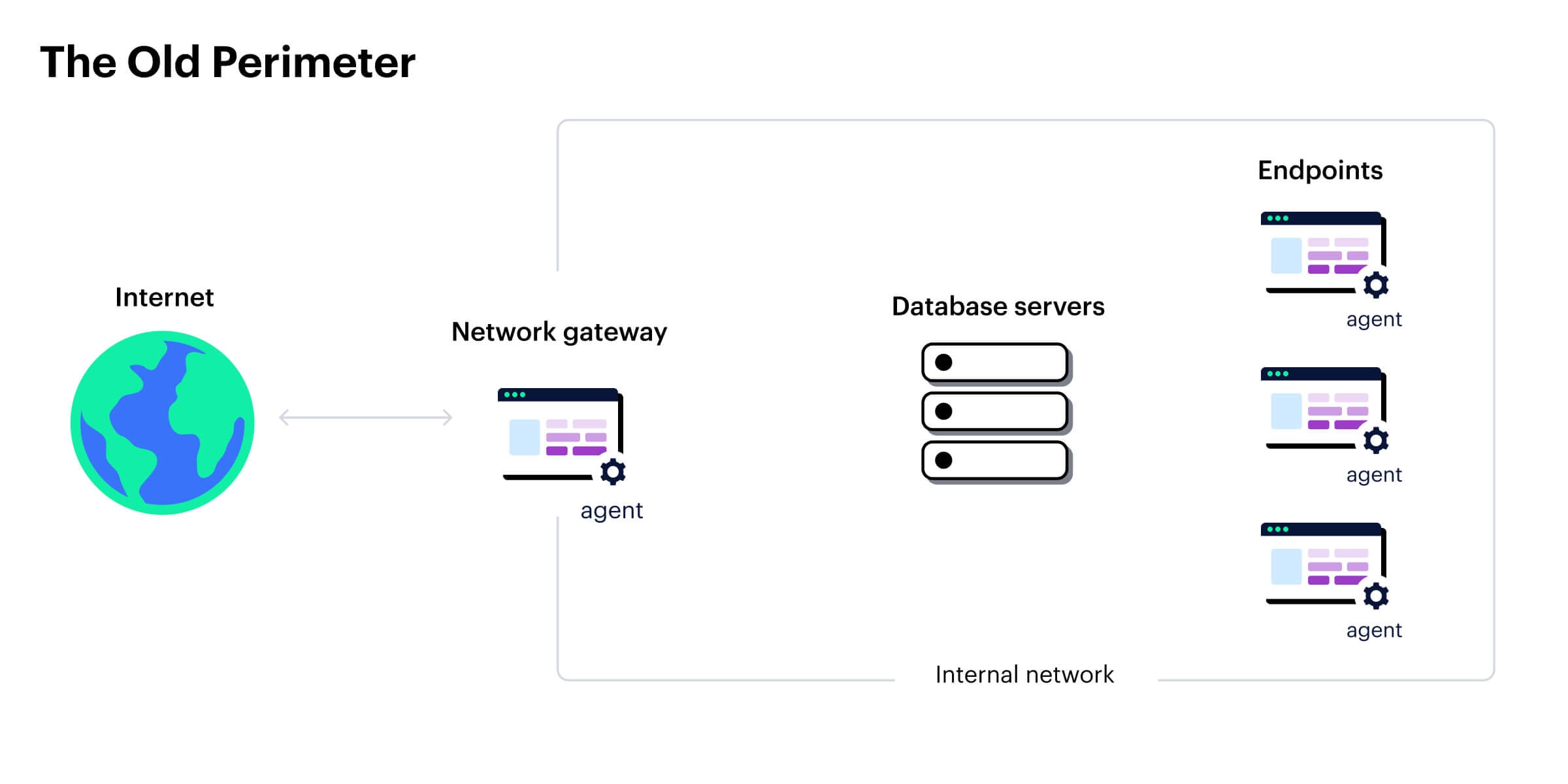

Traditional approaches to DLP were developed when organizations stored data on their physical server infrastructure, and data movement was restricted to internal networks. This was the perimeter that needed securing — and DLP tools would detect sensitive data and block attempts to exfiltrate it. The simplest way to do this was through monitoring the network and using agent-based solutions. In other words, software was installed on servers and endpoints to continuously monitor data and user activity.

An agent had, and still has, the advantage of seeing everything, including:

- Misconfigurations, such as unused open ports

- Policy violations, such as unencrypted data

- Suspicious endpoint activity, such as a thumb drive inserted into a laptop connected to the VPN

DLP tools scan data records at rest in company databases, detecting and classifying sensitive data. They also monitor data in motion across the corporate network and all known endpoints, identifying breaches or leaks in real time and alerting security teams for prompt remediation.

How the Cloud Changed Everything

As with many aspects of software development, the twin forces of cloud adoption and digital transformation have shaken up DLP and created a need for new types of solutions. The cloud challenges traditional approaches to DLP in four areas.

More Data to Secure

Organizations want to collect, retain, and process more data than ever, and the cloud’s elasticity and ease of use enable them to do so with minimal IT overhead. Competitive pressures have created a sense of urgency to accelerate data innovation, which leads to a business environment that’s supportive of new data initiatives — and these come with additional storage, analytics and reporting requirements.

Complexity and Constant Flux

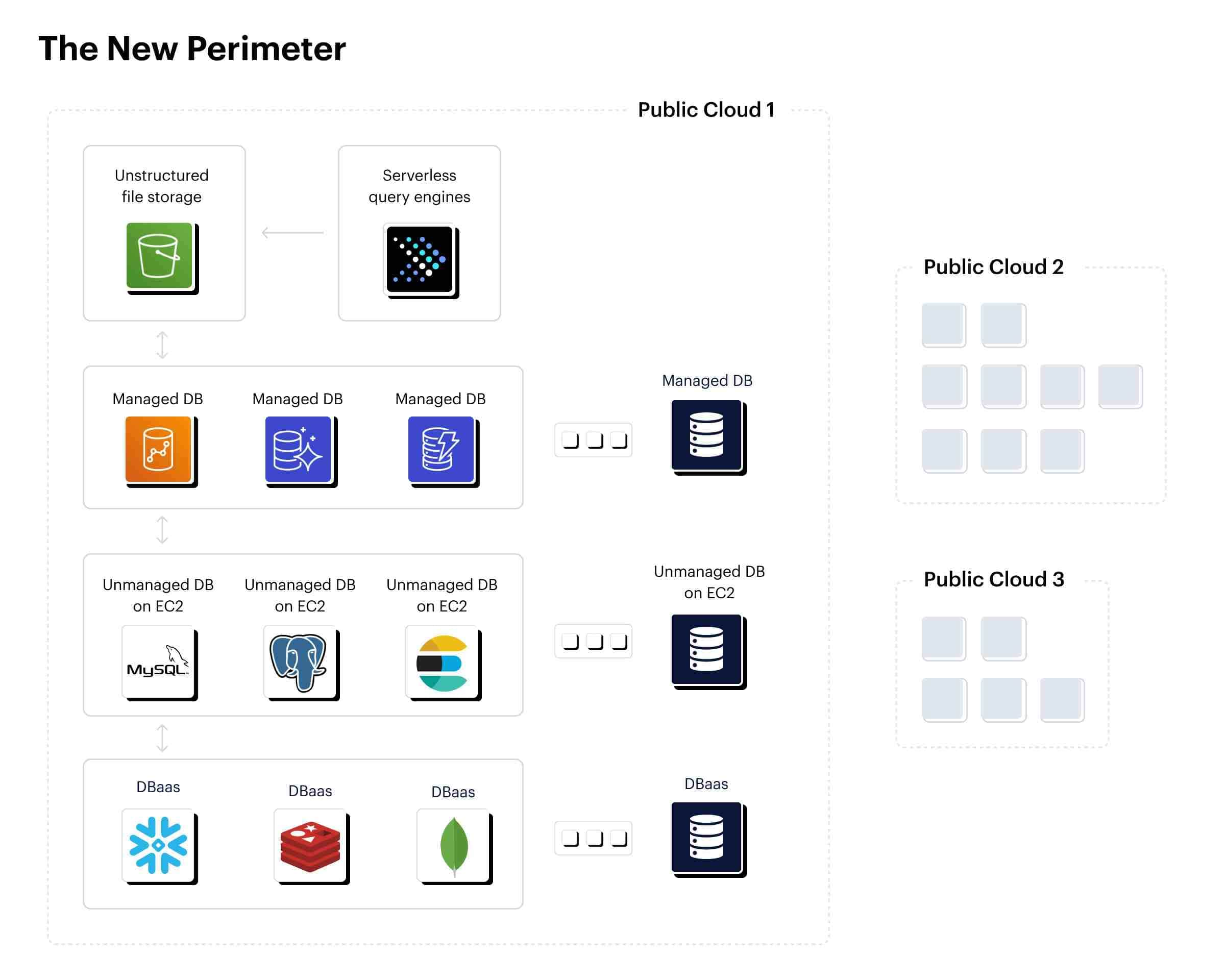

Rather than securing the corporate network as a single perimeter and the enterprise data warehouse as the main destination for analytical processing, data is now spread across a multitude of private and public cloud services.

These services can be spun up and down as needed, and new ones can be added at any time. Data itself is constantly moving between datastores, and it’s all but impossible to predict the flow of data in advance.

Agent-Based Solutions Aren’t Always Cloud Compatible

The cloud abstracts infrastructure behind interfaces (PaaS, DBaaS) or declarative scripting (IaC). In most of these situations, the organization doesn’t have access to the physical hardware, meaning it can’t install software on the machines that store and process data. Tooling available from the cloud service providers can only provide a partial picture, particularly in multicloud deployments.

Even in cases where it’s technically possible to use agent-based solutions (such as IaaS), the pace at which new servers and clusters are added makes this type of monitoring unmanageable due to the quantity and volatility of assets.

Overwhelmed Security Teams

Security teams must keep track of myriad services, configurations and data flows while attempting to maintain a holistic view of the cloud environment. With their resources stretched, security teams struggle to stay on top of every alert and notification.

The cloud has set DLP back several steps, moving it from a mature ecosystem of end-to-end data security tools to a fragmented patchwork of APIs, policies, and tools. Most organizations today deal with individual solutions for specific aspects of data security, such as data classification on Amazon S3, data protection in Snowflake and Purview on Azure. Security teams face the challenge of integrating these disparate systems into a cohesive and consistent security strategy.

Components of a DLP Solution for the Public Cloud

Organizations will never give up on data security, nor will they accept the massive financial and reputational risks of a data breach. The industry is aware of the dilemma, and a new type of cloud data security solution has emerged. Designed to address the unique characteristics of the public cloud, it avoids the limitations of its legacy counterparts. That means a different design from the ground up, built around five core components.

1. Agentless Data Discovery in Fractured, Complex Environments

Cloud DLP solutions need to address the reality of modern cloud deployments, which are no longer built around a monolithic data platform such as an Oracle data warehouse. Instead, organizations rely on a diverse combination of best-of-breed tools to satisfy the analytical requirements of different teams and shorten time to value from data initiatives. Across teams and business units, an enterprise might be managing dozens of data services and thousands of data assets. And as data teams adopt principles from microservices-based development, we’re likely to see an even more fractured data stack in the future — to say nothing of a higher potential for shadow data.

A cloud DLP tool needs to automate the legwork involved in discovering sensitive data in managed and unmanaged databases, as well as in object storage such as Amazon S3. Since agent-based solutions aren’t fit for purpose, organizations need an agentless solution.

Instead of installing agents, modern cloud DLP uses APIs, log analysis or other means to retrieve a representative sample of the data, scan it for sensitive records, and perform further analytical operations. This must be done without disrupting production and, for security reasons, without moving data to an external cloud account.
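To make the sample-then-scan idea concrete, here is a minimal Python sketch. It assumes the records have already been retrieved through a provider API as plain strings. The `PATTERNS` table, `sample_records` and `scan_sample` are hypothetical names used only for illustration, and real tools rely on far richer detectors than two regular expressions.

```python
import random
import re

# Hypothetical detectors for illustration; real classifiers are far richer.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def sample_records(records, sample_size, seed=0):
    """Return a random sample so the scan never has to read the full datastore."""
    records = list(records)
    if len(records) <= sample_size:
        return records
    return random.Random(seed).sample(records, sample_size)


def scan_sample(records):
    """Count how many sampled records match each sensitive-data pattern."""
    hits = {name: 0 for name in PATTERNS}
    for record in records:
        for name, pattern in PATTERNS.items():
            if pattern.search(record):
                hits[name] += 1
    return hits


# Made-up rows standing in for data fetched through an API.
rows = [
    "order 1001 shipped to jane@example.com",
    "order 1002 pending",
    "customer ssn 123-45-6789 on file",
]
findings = scan_sample(sample_records(rows, sample_size=10))
```

Because only a bounded sample is read, the cost of a scan stays roughly constant as the datastore grows, which is one way to avoid disrupting production.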

2. Data Classification and Inventory

Once the data is discovered, it needs to be classified according to the organization’s data security policies. This could include data that comes with specific regulatory requirements such as PII, PCI or PHI, as well as custom sensitive fields such as customer IDs or product codes.

At the end of the classification process, the security team should have an inventory of all sensitive data residing in its cloud account, including shadow data on cloud object storage or unmanaged datastores. The team can then prioritize risks and policy violations based on the data's content and context.
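As a rough illustration of classification feeding an inventory, the sketch below labels assets by matching column names against a policy and then sorts the sensitive ones so the riskiest come first. The `POLICY` keywords, the `Asset` fields and the ordering rule are all assumptions made for this example, not a description of any particular product.

```python
from dataclasses import dataclass, field

# Hypothetical policy: class label -> column names that trigger it.
POLICY = {
    "PII": {"email", "ssn", "phone"},
    "PCI": {"card_number", "cvv"},
    "PHI": {"diagnosis"},
    "CUSTOM": {"customer_id", "product_code"},
}


@dataclass
class Asset:
    name: str
    columns: list
    publicly_accessible: bool = False  # context, not content
    labels: set = field(default_factory=set)


def classify(asset):
    """Attach every policy label whose keywords appear in the asset's columns."""
    for label, keywords in POLICY.items():
        if keywords & set(asset.columns):
            asset.labels.add(label)
    return asset


def build_inventory(assets):
    """Keep only assets holding sensitive data, riskiest first."""
    sensitive = [a for a in map(classify, assets) if a.labels]
    return sorted(
        sensitive,
        key=lambda a: (a.publicly_accessible, len(a.labels)),
        reverse=True,
    )


assets = [
    Asset("orders_db", ["order_id", "card_number", "email"]),
    Asset("logs_bucket", ["timestamp", "message"]),
    Asset("export_bucket", ["email"], publicly_accessible=True),
]
inventory = build_inventory(assets)
```

Here `export_bucket` ranks above `orders_db` even though it carries fewer labels, because the ordering weighs context (public exposure) before content.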

3. Data-Aware Posture and Static Risk Analysis

A cloud DLP tool needs to continuously monitor the cloud account for changes in data flows, misconfigurations and new services added to the environment. This includes a posture analysis of the account — a real-time check of whether the cloud account is set up according to industry and domain-specific best practices, such as encryption, access control or well-defined retention periods.

Taking data context and classification into account allows security teams to focus their posture-hardening efforts on sensitive data assets, rather than attempt to chase misconfigurations across the entire cloud account.
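One way to picture data-aware posture analysis is a set of configuration checks whose severity depends on whether the datastore holds sensitive data. The following sketch assumes a simplified, hypothetical `DatastoreConfig` record. A real tool would pull these settings from cloud provider APIs and apply many more checks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DatastoreConfig:
    # Hypothetical, simplified view of a datastore's settings.
    name: str
    encrypted_at_rest: bool
    public_access: bool
    retention_days: Optional[int]
    holds_sensitive_data: bool


def posture_findings(cfg):
    """Return the best-practice violations for one datastore."""
    findings = []
    if not cfg.encrypted_at_rest:
        findings.append("encryption at rest disabled")
    if cfg.public_access:
        findings.append("publicly accessible")
    if cfg.retention_days is None:
        findings.append("no retention period defined")
    return findings


def prioritized_report(configs):
    """List findings, with those on sensitive datastores first."""
    report = []
    for cfg in configs:
        severity = "high" if cfg.holds_sensitive_data else "low"
        for finding in posture_findings(cfg):
            report.append((severity, cfg.name, finding))
    order = {"high": 0, "low": 1}
    return sorted(report, key=lambda row: order[row[0]])


configs = [
    DatastoreConfig("scratch_bucket", False, False, 30, False),
    DatastoreConfig("patients_db", True, True, None, True),
]
report = prioritized_report(configs)
```

The unencrypted scratch bucket still shows up in the report, but it lands below both findings on `patients_db`, which is the behavior the paragraph above argues for.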

4. Agentless Dynamic Monitoring and Detection

The previous components fall broadly under the umbrella of data security posture management (DSPM). They help organizations understand their data environments and establish a realistic data security strategy. But compared to the previous generation of DLP solutions, one gap remains: the ability to detect and respond to critical incidents in real time.

To provide a solution for real-time monitoring, cloud DLP tools need to include data detection and response (DDR) capabilities. Similar to agent-based tools, these solutions can then identify records being exfiltrated and detect suspicious user activity, such as a sudden spike in API calls or a user logging in from a new location. By applying real-time predictive analytics to logs generated by cloud providers, cloud DLP can offer a solid level of real-time protection without requiring agent installation.
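The two signals mentioned above, a spike in API calls and a login from a new location, can be sketched with a simple pass over log events. This example swaps real predictive analytics for a plain threshold against each user's running average, and it assumes a hypothetical event schema (`user`, `minute`, `location`) rather than any provider's actual log format.

```python
from collections import defaultdict
from statistics import mean


def detect_anomalies(events, spike_factor=3.0):
    """Flag new locations and per-minute API call spikes.

    events: dicts with 'user', 'minute' and 'location' keys, ordered by
    minute (a hypothetical schema chosen for this sketch).
    """
    calls = defaultdict(lambda: defaultdict(int))  # user -> minute -> count
    seen_locations = defaultdict(set)
    alerts = []

    for event in events:
        user = event["user"]
        known = seen_locations[user]
        # A location is "new" only if the user already has a history.
        if known and event["location"] not in known:
            alerts.append((user, "new_location", event["location"]))
        known.add(event["location"])
        calls[user][event["minute"]] += 1

    for user, per_minute in calls.items():
        minutes = sorted(per_minute)
        for i, minute in enumerate(minutes[1:], start=1):
            # Baseline is the average of all earlier minutes for this user.
            baseline = mean(per_minute[m] for m in minutes[:i])
            if per_minute[minute] > spike_factor * baseline:
                alerts.append((user, "api_spike", minute))
    return alerts


# Made-up events: two quiet minutes, then a burst from another location.
events = (
    [{"user": "alice", "minute": 0, "location": "US"}] * 2
    + [{"user": "alice", "minute": 1, "location": "US"}] * 2
    + [{"user": "alice", "minute": 2, "location": "BR"}] * 10
)
alerts = detect_anomalies(events)
```

A fixed multiplier is crude and would be noisy in practice. The point is only that both signals can be derived from provider logs, with nothing installed on the monitored systems.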

5. A Unified and Up-to-Date Threat Model

Organizations want to continue moving fast and adopting new technologies without having to update their security stack or implementation whenever a new data service is added. Cloud DLP should support this motion by providing and instantly applying a unified threat model to any new component in the data stack — even in multicloud and hybrid cloud environments.

The threat model needs regular updating based on learnings from the latest data breach incidents, attack pathways and vulnerability reports. Cloud DLP providers must supply not just the technological means but also the domain expertise to fine-tune these threat models. The threat model must remain accurate and sensitive enough to surface critical threats — without contributing to further notification overload.

Learn More

DSPM with data detection and response (DDR) offers critical capabilities previously missing in the cloud security landscape — data discovery, classification, static risk management, and continuous and dynamic monitoring of complex, multicloud environments. Learn how to secure your sensitive data in the cloud with our definitive DSPM resource. Download Securing the Data Landscape with DSPM and DDR.

Prisma Cloud combines DSPM, DDR and an industry-leading threat model to provide end-to-end protection across your multicloud environments.