How do you unlock the competitive advantage of game-changing technologies while keeping them secure? It’s a pressing question for security professionals looking for ways to embrace AI across their organizations. Employees are adopting AI applications at an unprecedented rate, and companies in every major industry are gaining an edge by introducing their own AI-powered applications. The challenge? This adoption of AI expands the attack surface that must be secured. To meet these urgent needs, we’ve just announced the upcoming availability of three new products powered by Precision AI™.

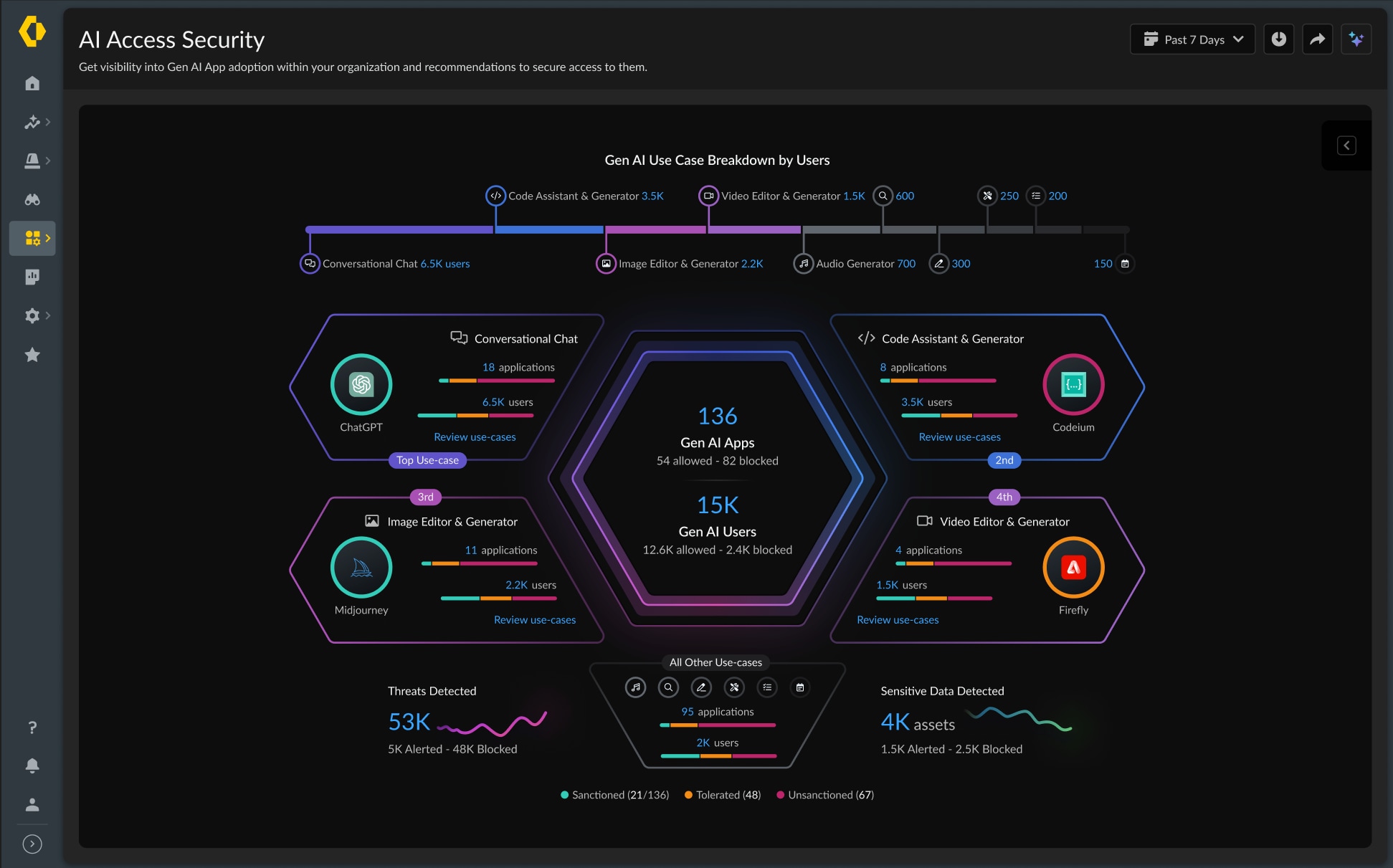

- AI Access Security is designed to eliminate the data and security risks associated with employees accessing and using Generative AI (Gen AI) applications.

- AI Security Posture Management (AI-SPM) enables rapid Gen AI application development by reducing risk in the AI application stack and supply chain.

- AI Runtime Security gives you runtime protection against new attacks targeting your AI ecosystem.

Public preview and general availability of these platform components will be announced soon. In the meantime, here’s a sneak peek at what’s to come:

Safeguarding Employee AI Adoption Becomes Essential

For all their extraordinary capabilities, AI-powered applications and large language models (LLMs) introduce new data security issues and expand the attack surface. As adoption snowballs, these applications become a more enticing, and more profitable, target for attackers. A recent Salesforce survey of over 14,000 workers found that 55% of employees use unapproved Gen AI at work. With dozens of new AI applications launching every month, it’s only a matter of time before there is an AI application for every employee and every use case. This is a new type of shadow IT, one that can expose your organization to data leakage and malware. At the same time, according to TechTarget’s Enterprise Strategy Group, 85% of businesses have proprietary LLMs planned or already built into products generally available to their customers. There’s no going back.

This rapid evolution also means that shadow IT is morphing into shadow AI. People gravitate toward what’s convenient, which can make maintaining a robust security posture decidedly inconvenient. With AI Access Security, information security professionals will gain visibility and control over hundreds of third-party AI applications, prevent sensitive data leaks with comprehensive data classification capabilities and continue to secure their devices, applications and networks against threats originating from insecure or compromised AI platforms.

Visibility Fortifies AI Supply Chain Integrity

Of course, employee use of third-party AI isn’t the only way AI is entering the enterprise. Innovative organizations realize they can improve both their top and bottom lines by supercharging their own applications with AI. As they do, new AI components are added to application stacks, increasing the potential for sensitive data exposure through training and inference datasets. Addressing this means building security into both the AI supply chain and the AI runtime.

Reducing security risks in the AI development supply chain will be increasingly important to our customers as they work to identify vulnerabilities and exposures in their AI-based applications. AI-SPM gives infosec teams, product managers and developers visibility into AI application code, models and associated resources. By identifying and tracing the lineage of the AI components and data used to build new applications, this Prisma Cloud module will go a long way toward keeping vulnerabilities out of the AI development supply chain. To reduce data exposure, as well as misconfigurations and excessive access, AI-SPM includes comprehensive model risk analysis features. Together, these capabilities help defend against sensitive data exposure by applying data security classification across the entire AI stack, including model resources.

Using AI Protects the Entire AI Ecosystem at Runtime

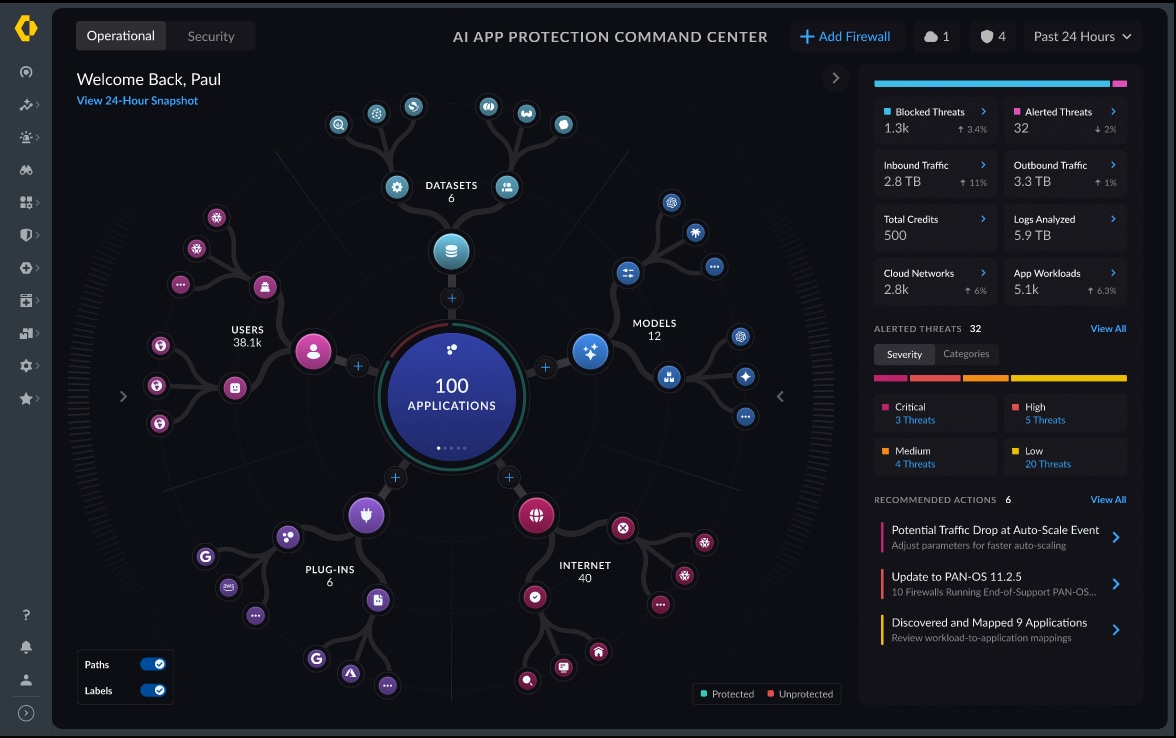

To protect AI apps, models and data from brand-new threats to AI ecosystems at runtime, we’re introducing AI Runtime Security. It addresses prompt injections, malicious responses, LLM denial of service and training data poisoning, as well as foundational runtime attacks such as malicious URLs, command-and-control (C2) traffic and lateral movement. Self-learning runtime security capabilities protect all AI applications, models and data with no code changes. With these capabilities in place, AI Runtime Security offers comprehensive protection against AI-specific attacks through AI Model Protection, AI Application Protection and AI Data Protection. AI Runtime Security is being designed to prevent direct and indirect prompt injections and model denial of service (DoS) and to protect training data from tampering or poisoning, all while blocking sensitive data extraction.

Palo Alto Networks Secures Enterprise Adoption of AI

With the explosion of AI adoption, the network and data security attack surface is growing significantly. At Palo Alto Networks, as leaders and early adopters of AI in network security, we understand how this affects our customers' security posture. It’s a new day and a brand-new fight, and we’re here to help our customers secure AI and innovate safely. To learn how to become an early adopter, find the details here.

This blog contains forward-looking statements that involve risks, uncertainties and assumptions, including, without limitation, statements regarding the benefits, impact, or performance or potential benefits, impact or performance of our products and technologies. These forward-looking statements are not guarantees of future performance, and there are a significant number of factors that could cause actual results to differ materially from statements made in this blog. We identify certain important risks and uncertainties that could affect our results and performance in our most recent Annual Report on Form 10-K, our most recent Quarterly Report on Form 10-Q, and our other filings with the U.S. Securities and Exchange Commission from time to time, each of which is available on our website at investors.paloaltonetworks.com and on the SEC's website at www.sec.gov. All forward-looking statements in this blog are based on information available to us as of the date hereof, and we do not assume any obligation to update the forward-looking statements provided to reflect events that occur or circumstances that exist after the date on which they were made.