This post is part of an ongoing blog series examining “Sure Things” (predictions that are almost guaranteed to happen) and “Long Shots” (predictions that are less likely to happen) in cybersecurity in 2017.

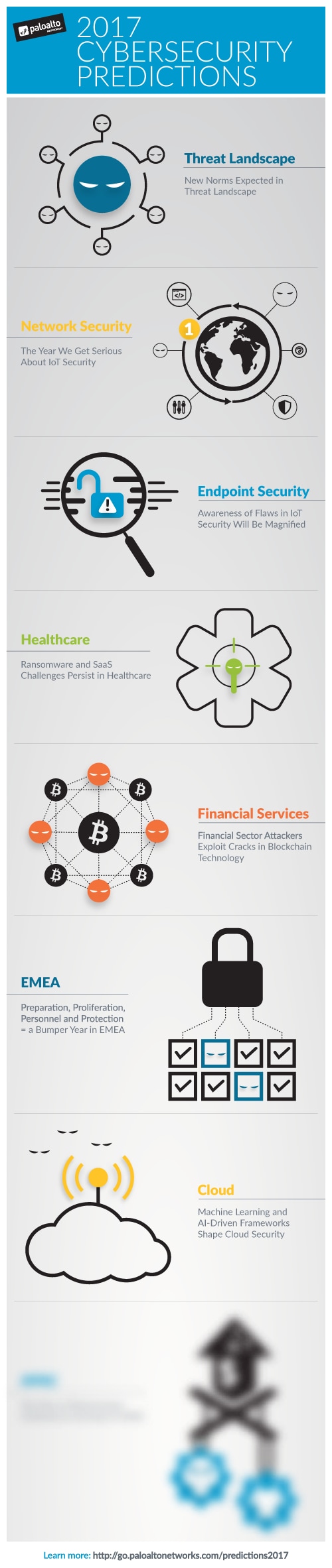

Here’s what we predict for cloud in 2017:

Sure Things

A multi-cloud, hybrid security strategy will be the new normal among InfoSec teams

In the last few years, the digital footprint of organizations has expanded beyond the confines of the on-premises data center and private cloud to a model that now incorporates SaaS and public clouds. To date, InfoSec teams have been in reactive mode while trying to implement a comprehensive security strategy across their hybrid architecture. In 2017, we will see a concerted effort from InfoSec teams to build and roll out a multi-cloud security strategy geared toward addressing the emerging digital needs of their organizations. The need for a consistent security posture, pervasive visibility, and ease of security management across all clouds will drive security teams to extend their strategy beyond public and private clouds and to focus on securely enabling SaaS applications as well.

Shifting ground within data privacy laws will impact cloud security choices

Cross-border data privacy laws play a significant role when organizations across the globe consider their cloud computing options. With recent developments, such as Brexit and the expansion of cross-border data flow restrictions in Asia-Pacific, IT security leaders will look for flexibility and adaptability from their cloud security vendors in 2017. Cloud security offerings need to address the diversity among clouds, enforce consistent security policy, and adapt to the data privacy laws of the resident nation-state. The WildFire EU cloud is a great example of enabling regional presence to comply with local data residency requirements. It is a global, cloud-based, community-driven threat analysis framework that correlates threat information and builds prevention rulesets that can be applied across the public, private and SaaS footprint of organizations based in Europe.

Large-scale breach in the public cloud

The excitement and interest around utilizing the public cloud remind us of the early days of the Internet. Nearly every organization we talk to is using or looking to use either Amazon Web Services (AWS) or Microsoft Azure for new projects. Based on this observation, we predict that a security incident resulting in the loss of data stored in a public cloud will garner international attention. The reality is that, given the volume of data loss over the past year, one or more successful breaches have likely occurred already, but where the data was located (private, public, SaaS) is rarely, if ever, disclosed. That is bound to change as more companies move their business-critical applications to the public cloud.

The basis of the prediction is twofold. Public cloud vendors are more secure than most organizations, but their protection is for underlying infrastructure, not necessarily the applications in use, the access granted to those applications, and the data available from using those applications. Attackers do not care where their target is located. Their goal is to gain access to your network; navigate to a target, be it data, intellectual property or excess compute resources; and then execute their end goal – regardless of the location. From this perspective, your public cloud deployment should be considered an extension of your data center, and the steps to protect it should be no different than those you take to protect your data center.

The speed of the public cloud movement, combined with the “more secure infrastructure” statements, is, in some cases, leading to security shortcuts where little to no security is being used. Too often we hear from customers and prospects that the use of native security services and/or point security products is sufficient. The reality is that basic filtering and ACLs do little to reduce the threat footprint: opening TCP/80 and TCP/443 allows nearly 500 applications of all types, including proxies, encrypted tunnels and remote access applications. Port filtering is incapable of preventing threats or controlling file movements, and it improves only slightly when combined with detect-and-remediate point products or those that merely prevent known threats. It is our hope that, as public cloud projects increase in volume and scope, more diligence is applied to the customer piece of the shared security responsibility model. Considerations should include complete visibility and control at the application level and the prevention of known and unknown threats, with an eye toward automation to take what has been learned and use it to continually improve prevention techniques for all customers.
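To make the port-versus-application point concrete, here is a minimal sketch in Python. Everything in it is illustrative: the `Flow` type, the application labels and the two allow-lists are hypothetical, and the sketch assumes some traffic classifier has already labeled each flow with an application name. It is not how any particular firewall or cloud ACL is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    app: str   # hypothetical label, assumed to come from a traffic classifier
    port: int  # destination TCP port


# Hypothetical policies, chosen only for illustration.
ALLOWED_PORTS = {80, 443}
ALLOWED_APPS = {"web-browsing", "ssl"}


def port_filter(flow: Flow) -> bool:
    """Port-only rule: anything on an open port is allowed."""
    return flow.port in ALLOWED_PORTS


def app_filter(flow: Flow) -> bool:
    """Application-level rule: the port must be open AND the app must be sanctioned."""
    return flow.port in ALLOWED_PORTS and flow.app in ALLOWED_APPS


# Made-up sample traffic: five different applications sharing two ports.
flows = [
    Flow("web-browsing", 80),
    Flow("ssl", 443),
    Flow("anonymizing-proxy", 443),
    Flow("remote-access", 443),
    Flow("encrypted-tunnel", 80),
]

allowed_by_port = [f.app for f in flows if port_filter(f)]
allowed_by_app = [f.app for f in flows if app_filter(f)]

print("port filter allows:", allowed_by_port)
print("app filter allows: ", allowed_by_app)
```

In this toy data set the port-only rule passes every flow, including the proxy, tunnel and remote access traffic, while the application-level rule passes only the two sanctioned applications.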

Long Shots

Autonomic Security: Rise of artificial intelligence and machine learning-driven security frameworks

2016 introduced self-driving cars and selfie drones to consumers. The technology behind these innovations was heavily driven by artificial intelligence (AI) and machine learning (ML). AI and ML usage within cybersecurity is not new. Cybersecurity vendors have been leveraging them for threat analysis and the big data challenges posed by threat intelligence. But the pervasive availability of open source AI/ML frameworks, and the automation simplicity associated with them, will redefine security automation approaches within InfoSec teams. Today, security automation is about simplifying and speeding up monotonous tasks associated with cybersecurity policy definition and enforcement. Soon, InfoSec teams will leverage artificial intelligence and machine learning frameworks to implement predictive security postures across public, private and SaaS cloud infrastructures. We are already seeing early examples of this approach. Open source projects, such as MineMeld, are shaping InfoSec teams’ thinking on leveraging externally sourced threat data and using it to self-configure security policy based on organization-specific needs. In 2017 and beyond, we will see the rise of autonomic approaches to cybersecurity.

Insecure API: Subverting automation to hack your cloud

Application programming interfaces (APIs) have become the mainstay for accessing services within clouds. Realizing the potential problems associated with traditional authentication methods and credential storage practices (hard-coded passwords, anyone?), cloud vendors have implemented authentication mechanisms (API keys) and metadata services (temporary passwords) as alternatives that streamline application development. The API approach is pervasive across all cloud services and, in many cases, insecure. It provides a new attack vector for hackers, and in 2017 and beyond, we will hear about more breaches that leverage open, insecure APIs to compromise clouds.
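As a small illustration of the credential storage problem, the Python sketch below contrasts a hard-coded secret with one supplied at runtime. The variable name `CLOUD_API_KEY` and the helper `load_api_key` are hypothetical and do not belong to any specific cloud provider's SDK. Reading a key from the environment is only one possible alternative, and it does not by itself make an API secure.

```python
import os
from typing import Mapping

# Anti-pattern: a secret embedded in source code ends up in version control,
# build artifacts and backups. The value here is a made-up placeholder.
HARD_CODED_KEY = "example-not-a-real-key"

# Hypothetical environment variable name, used only for this sketch.
API_KEY_VAR = "CLOUD_API_KEY"


def load_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the API key supplied at runtime, or fail loudly if it is missing."""
    key = env.get(API_KEY_VAR)
    if not key:
        raise RuntimeError(f"{API_KEY_VAR} is not set; refusing to fall back to a default")
    return key


# Usage with an explicit mapping, so the example does not depend on the real
# environment of whoever runs it.
demo_env = {API_KEY_VAR: "value-injected-at-deploy-time"}
print("key loaded:", load_api_key(demo_env) != HARD_CODED_KEY)
```

The deliberate choice here is to raise an error rather than fall back to a built-in default, since a silent default is just another hard-coded credential.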

What are your cybersecurity predictions around cloud and cloud security? Share your thoughts in the comments and be sure to stay tuned for the next post in this series where we’ll share predictions for Asia-Pacific.